Textures

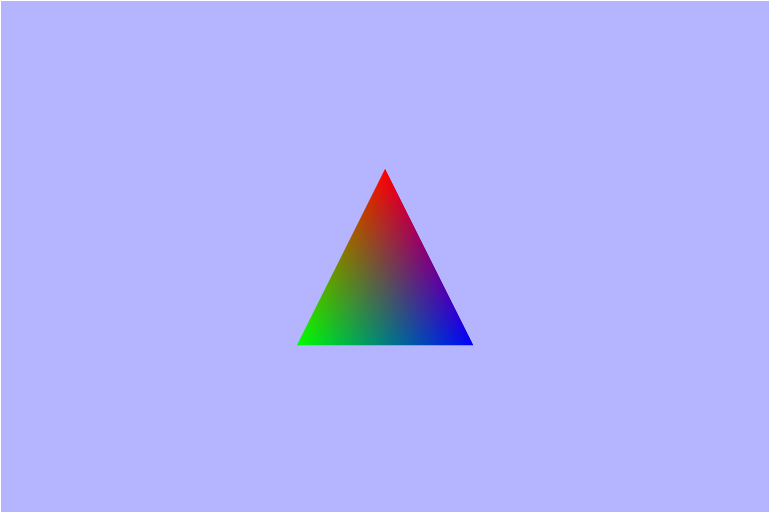

What are textures and how do we use them? Well to get there, let's first take a look back to the way we have been adding color to the objects from our previous scenes. Take for example the triangle from Weeks 7 & 8: The Rasterization Pipeline.

3 color gradient

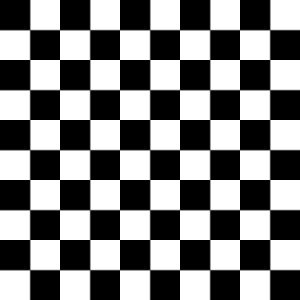

Now, this triangle clearly has more than 3 colors on it, but it did not start that way. We started by defining 3 colors, one for each vertex: one red, one blue, and one green. Then each fragment got its color by interpolating the vertex colors, weighted by how close the fragment is to each vertex. That interpolation is what provides that nice gradient/blending effect. Gradients, along with solid colors, are coloring patterns that lend themselves quite nicely to this interpolation method. So what if we wanted to make a more complicated pattern? Say we wanted to make a checkerboard pattern on some sort of rectangular geometry.

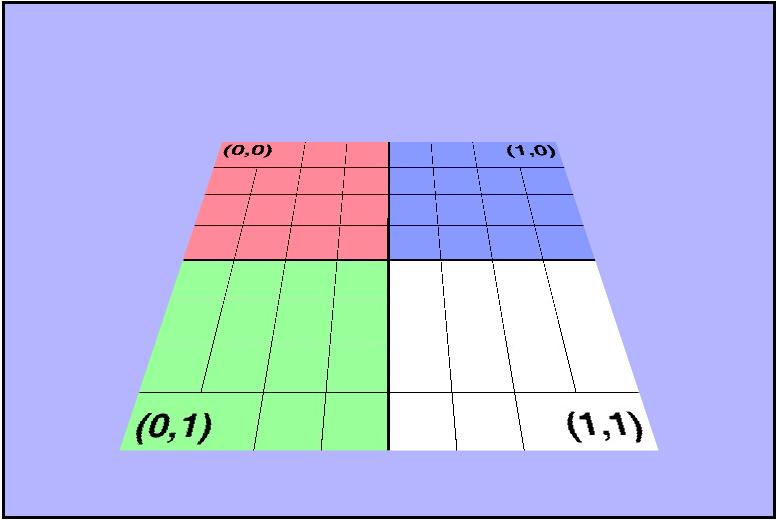

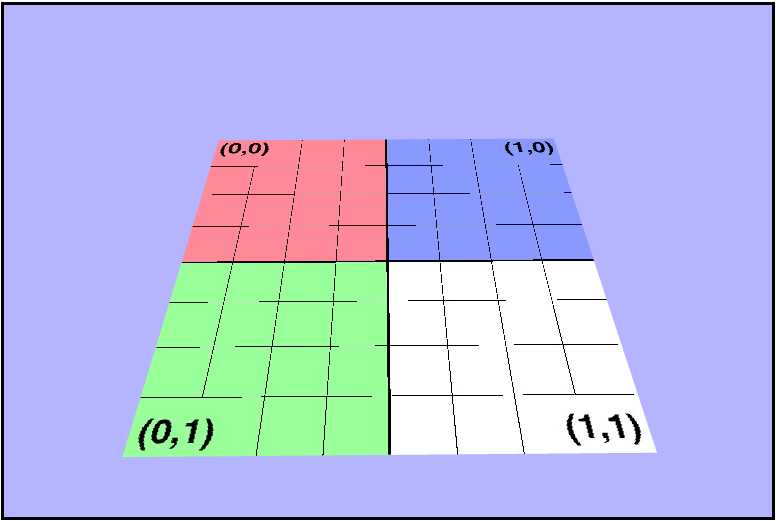

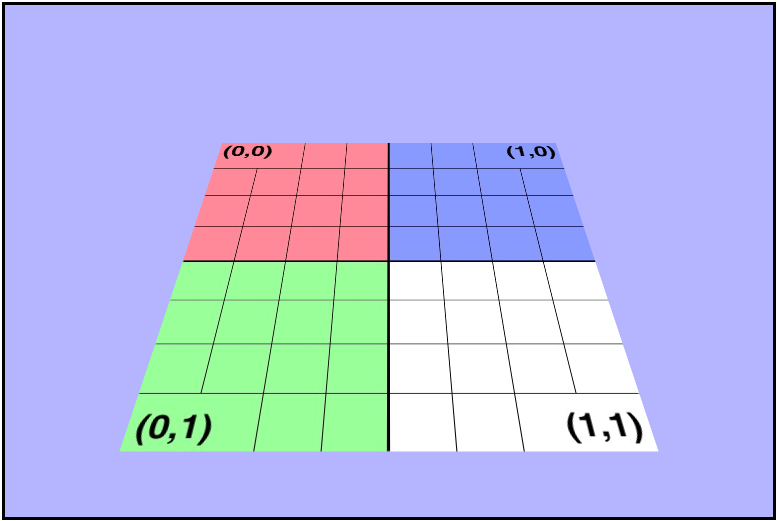

How would we go about doing that using the interpolated value from each vertex? Well, we would probably have to break this quad into $100$ smaller quads (since it's $10\times 10$) instead of trying to shade using the single quad. One thing to keep in mind though is that each quad is going to be rendered as $2$ triangles, so in reality we would go from $2$ triangles to $200$!! Now just imagine if we had an open world game and we wanted to render some dry grass in one of the areas.

Mr. Emoji up there says it all. That is one monumental task that we shouldn't try attempting the old way, so it looks like we need a better way to do it. Textures to the rescue!! Back to the original question: What are textures? Well, textures are just images, but more generally they are a set of color $(Red, Green, Blue)$ vectors arranged in a grid. Each individual color vector is called a texel. Using textures we can add much more realism and detail to our scenes than before, without the massive overhead cost of adding a ton of extra geometry.
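
To make the "grid of color vectors" idea concrete, here is a minimal sketch that builds the $10\times 10$ checkerboard as raw texel data instead of geometry. `makeCheckerboard` is a made-up helper for illustration (it is not part of the course code), and it stores four bytes per texel (RGBA rather than just RGB) on the assumption that the data would eventually be uploaded as an 8-bit RGBA texture.

```javascript
// Hypothetical helper: build a size x size checkerboard as a flat byte array.
// Each texel takes 4 bytes: red, green, blue, alpha.
function makeCheckerboard(size) {
  const texels = new Uint8Array(size * size * 4);
  for (let row = 0; row < size; row++) {
    for (let col = 0; col < size; col++) {
      // Alternate white and black squares, starting with white at (0, 0).
      const value = (row + col) % 2 === 0 ? 255 : 0;
      const i = (row * size + col) * 4;
      texels[i] = value;     // red
      texels[i + 1] = value; // green
      texels[i + 2] = value; // blue
      texels[i + 3] = 255;   // alpha, fully opaque
    }
  }
  return texels;
}

// 100 texels of detail, and the geometry can stay a single quad.
const board = makeCheckerboard(10);
```

The whole pattern is 400 bytes of data, while the quad is still just $2$ triangles.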

Texture Mapping

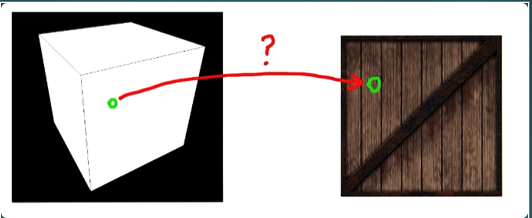

Now that we know what textures are, let's take a look at how we can use them to enhance the visuals of our scenes. To use a texture on an object in our scene, we can use a method called Texture mapping. Texture mapping allows us to take a $2D$ image and place it on (map it to) a $3D$ model in our scene.

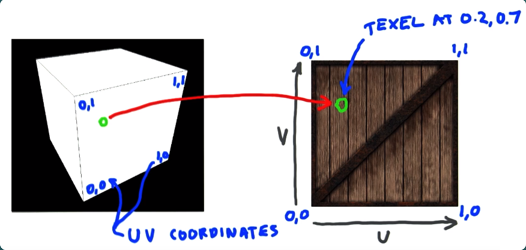

Since textures are $2D$ grids, we need two coordinate axes to define any single texel. The most common way is using $(u,\ v)$ coordinates; the other common set is $(s,\ t)$ coordinates. Great, we now have a way to address each texel, but that still leaves the question: How exactly do we define which texel goes where on the object? Well, let's start with a simple case where the texture is one-to-one with the object. That means that every texel on the texture maps to exactly one pixel on the object.

** Aside **

One thing that is very important in computer graphics is the number range $[0,\ 1]$. Basically, it's just easier to work when things are normalized that way, and it makes scaling the texture simpler. So we'll *want* to remap our $(u,\ v)$ coordinates to be in the range of $[0,\ 1]$. However, they are NOT required to be in this range.

** End Aside **

In this case let's assume we have one texel per pixel, so each pixel should be getting its correct value. We need to define the range (normalized to $[0,\ 1]$ here), and then we take the RGB value at each texel coordinate and apply it to the corresponding pixel on the object.

But where is that?

Ah-ha!

Now let's take a more complex example: mapping a texture onto a triangle. Here we don't have a nicely defined mapping, but we can just specify the $(u,\ v)$ coordinates for each vertex and let our old friend interpolation take over for the rest.
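
Here is a small sketch of what "let interpolation take over" means. It assumes we already know a fragment's barycentric weights (how much each of the three vertices contributes, summing to $1$); `interpolateUV` and the sample coordinates are made up for illustration. Real GPUs also correct for perspective, which this sketch leaves out.

```javascript
// Hypothetical helper: blend the three per-vertex (u, v) pairs using
// barycentric weights wA + wB + wC = 1.
function interpolateUV(uvA, uvB, uvC, wA, wB, wC) {
  return [
    wA * uvA[0] + wB * uvB[0] + wC * uvC[0],
    wA * uvA[1] + wB * uvB[1] + wC * uvC[1],
  ];
}

// Texture coordinates assigned to the triangle's three vertices.
const uvA = [0, 0];
const uvB = [1, 0];
const uvC = [0, 1];

// A fragment sitting exactly on vertex B gets B's coordinates.
const atVertexB = interpolateUV(uvA, uvB, uvC, 0, 1, 0);

// A fragment at the centre of the triangle gets an even mix of all three.
const atCentroid = interpolateUV(uvA, uvB, uvC, 1 / 3, 1 / 3, 1 / 3);
```

Every fragment in between ends up with its own $(u,\ v)$ pair, which is then used to look up a texel.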

This process can also be done in reverse, where a colored $3D$ model is decomposed into flat images through a process known as $uv$ unwrapping. This is usually done by an artist using a digital content creation tool like Blender.

UV Unwrapping

Texture Filtering

In the previous example of mapping a texture onto a triangle, we could see that the vertices didn't line up. I also said that interpolation will take over. There are many ways to configure how the interpolation will occur, but more generally, whenever there is not a one-to-one relationship between the texels (of the texture) and the pixels (of the object), we need to apply what is called filtering. The two situations that call for filtering are magnification and minification.

Magnification

Magnification occurs when the texture has a lower resolution than the area we are rasterizing. In other words, there are more pixels than there are texels. This causes single texels to be shared across multiple pixels, and a common place for this to occur is when a camera gets very, very close to an object in the scene. This also raises the question: How do we determine which color to give to the pixels? There may not be a clear way to sample texels for each pixel.

Minification

Minification is essentially the opposite of magnification and occurs when we have more texels than pixels. It causes a loss of resolution because multiple texels are now mapping to a single pixel, and just like magnification we need to find some form of filtering that fits our needs.

Mipmaps

We would like to have nice mappings for our textures, but it is almost never the simple one-to-one case. We need a way to approach minification besides just blending, and that's where mipmaps come in. Mipmaps are smaller, pre-filtered versions of an image, and they are one of the main reasons that graphics APIs like to have textures with power-of-two dimensions. Each level is usually half the size of the previous one in each dimension. They represent different amounts of detail and give us a better idea of what color we really want to map to each pixel, and they can often be auto-generated from the base image using the API. They find their use in situations where an object's texture needs to be minified, and will increase the image quality of things rendered farther away from the camera.
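
To get a feel for how many levels a mipmap chain has, here is a small sketch that halves the dimensions until it reaches $1\times 1$. `mipChainSizes` is a made-up helper for illustration; in WebGL the levels themselves can be generated with `gl.generateMipmap(gl.TEXTURE_2D)` once the base image has been uploaded.

```javascript
// Hypothetical helper: list the [width, height] of every mipmap level,
// halving each dimension (rounded down, never below 1) until 1x1.
function mipChainSizes(width, height) {
  const sizes = [[width, height]];
  while (width > 1 || height > 1) {
    width = Math.max(1, Math.floor(width / 2));
    height = Math.max(1, Math.floor(height / 2));
    sizes.push([width, height]);
  }
  return sizes;
}

// 512 -> 256 -> 128 -> ... -> 1
const chain = mipChainSizes(512, 512);
```

With power-of-two dimensions every halving is exact, which is part of why those sizes are so convenient here.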

Mipmaps

Types of Filtering

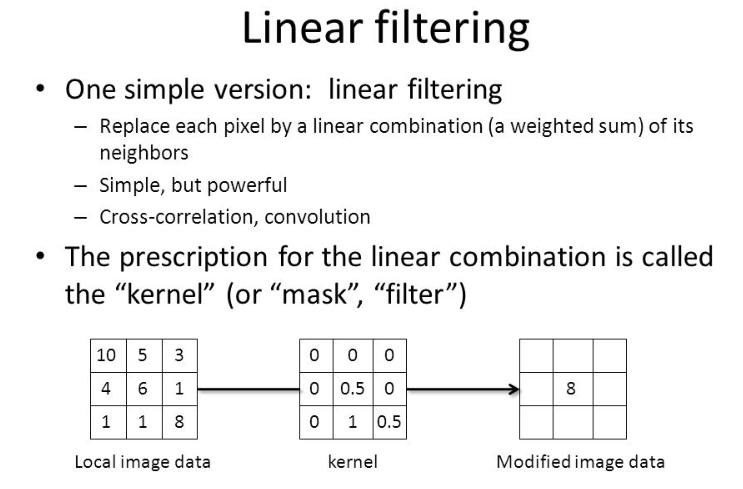

The first and most intuitive form of filtering is Point Sampling, or Nearest Neighbor filtering. This method determines the color for each pixel by using the color value from the closest texel. Point sampling is very good at preserving sharp edges in textures, so it is often the sampling method of choice for pixelated art, but it doesn't provide smooth transitions, which is where linear filtering (and its derived forms) thrives.

Linear Filtering is a method which attempts to provide smooth transitions between colors by determining the value for a pixel using a linear interpolation of its $4$ nearest neighbors' color values. Linear filtering aims to quickly provide a relatively smooth guess at what color we want, but it pales in comparison to its derived methods in terms of image quality.

Bilinear filtering is the next filtering mode, and as the name suggests, it performs linear filtering in two directions. It actually performs $3$ linear interpolations in total, but two of them are along the same axis. Bilinear filtering, as with linear filtering, causes rough edges to become smooth and blended, so it is not a good choice for something with sharp, defined edges.
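
The difference between nearest and bilinear sampling is easier to see on a tiny example. This is a minimal sketch on a $2\times 2$ single-channel texture; the `tex` object and every function name here are made up for illustration, and texel centres are assumed to sit half a texel in from each edge.

```javascript
// A tiny 2x2 single-channel "texture", stored row by row.
const tex = { width: 2, height: 2, data: [0, 80, 160, 240] };

// Read one texel, clamping so lookups outside the grid reuse the border.
function texelAt(t, x, y) {
  const cx = Math.min(t.width - 1, Math.max(0, x));
  const cy = Math.min(t.height - 1, Math.max(0, y));
  return t.data[cy * t.width + cx];
}

// Nearest: use the texel whose cell contains (u, v).
function sampleNearest(t, u, v) {
  return texelAt(t, Math.floor(u * t.width), Math.floor(v * t.height));
}

function lerp(a, b, f) {
  return a + (b - a) * f;
}

// Bilinear: two lerps along x (one per row), then one lerp along y.
function sampleBilinear(t, u, v) {
  // Shift by half a texel so texel centres land on integer coordinates.
  const x = u * t.width - 0.5;
  const y = v * t.height - 0.5;
  const x0 = Math.floor(x);
  const y0 = Math.floor(y);
  const fx = x - x0;
  const fy = y - y0;
  const row0 = lerp(texelAt(t, x0, y0), texelAt(t, x0 + 1, y0), fx);
  const row1 = lerp(texelAt(t, x0, y0 + 1), texelAt(t, x0 + 1, y0 + 1), fx);
  return lerp(row0, row1, fy);
}

const nearestCentre = sampleNearest(tex, 0.5, 0.5);   // snaps to one texel
const bilinearCentre = sampleBilinear(tex, 0.5, 0.5); // even mix of all four
```

At the exact centre of the texture, nearest has to pick a single texel while bilinear returns the average of all four, which is exactly the sharp-versus-smooth trade-off described above.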

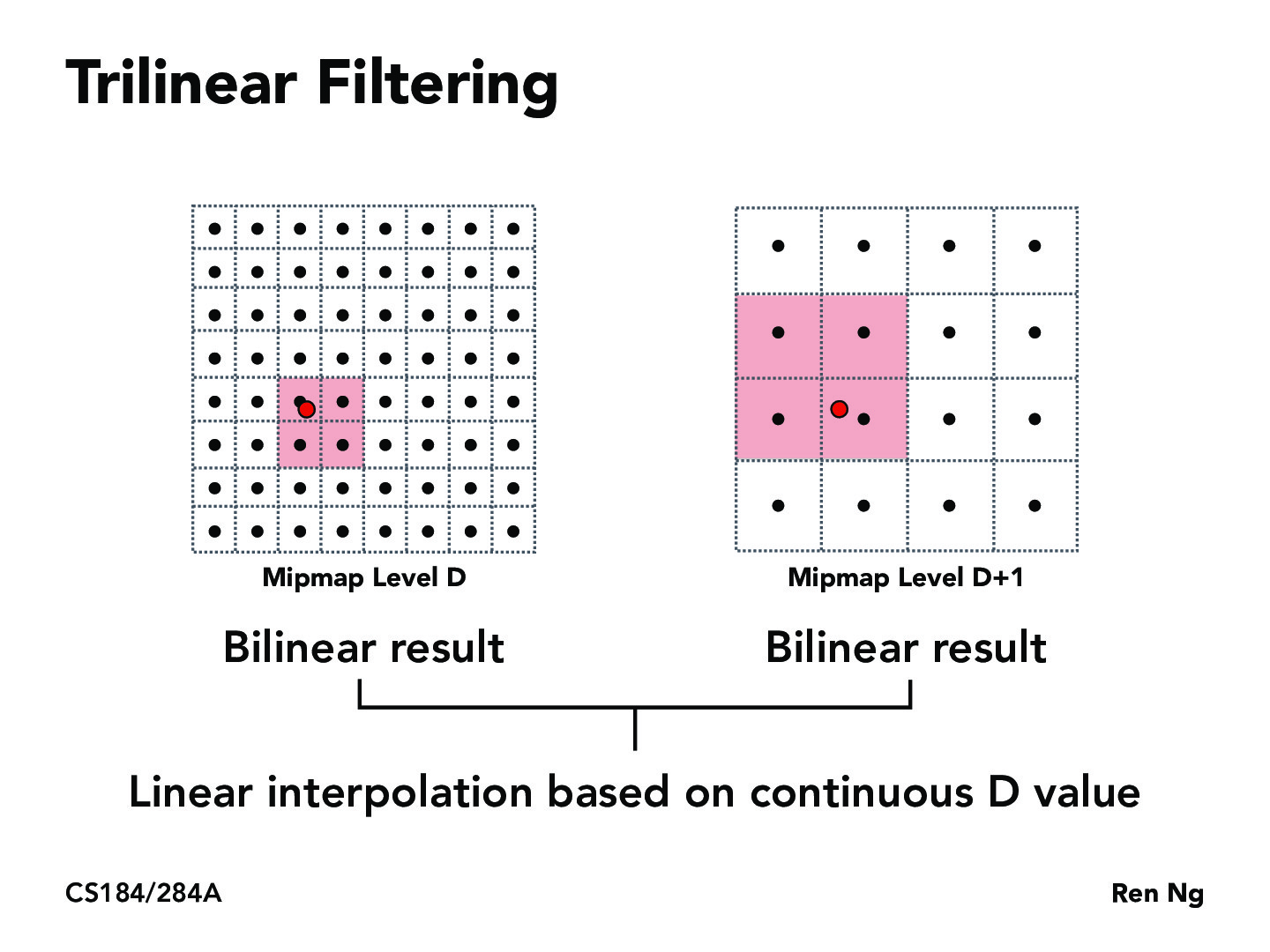

Trilinear filtering is the last filtering mode I'll cover here, but there are many more (over a dozen at least). Trilinear filtering is not just another dimension of linear filtering; rather, it is a linear interpolation over two bilinear interpolations. It works by taking the $2$ mipmap levels closest to the wanted resolution, performing bilinear filtering on both, and then performing one final linear interpolation between the two results. This method allows for much smoother transitions between mipmap levels.
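
That final interpolation step can be sketched in a few lines. To keep it short, this assumes the bilinear result for each mipmap level has already been computed and is passed in as an array; `sampleTrilinear` and the `lod` (level of detail) parameter are illustrative names, not anything from the course code.

```javascript
function lerp(a, b, f) {
  return a + (b - a) * f;
}

// levelSamples[i] is assumed to be the bilinear result from mip level i.
// lod is a fractional level, e.g. 0.25 means "a quarter of the way from
// level 0 to level 1".
function sampleTrilinear(levelSamples, lod) {
  const maxLevel = levelSamples.length - 1;
  const clamped = Math.min(maxLevel, Math.max(0, lod));
  const lower = Math.floor(clamped);
  const upper = Math.min(maxLevel, lower + 1);
  // One last lerp between the two bilinear results.
  return lerp(levelSamples[lower], levelSamples[upper], clamped - lower);
}

const blended = sampleTrilinear([200, 120, 90], 0.25);
```

Because the result slides smoothly from one level's value to the next, there is no visible jump where the renderer switches mipmap levels.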

Transparency and Alpha Blending

Let me start by defining alpha (α). Alpha is the opacity value for a color and determines how much light is blocked by the pixel rather than passing through it. An alpha value of $1.0$ means an object is fully opaque and no light goes through it, and an alpha value of $0.5$ means that $50\%$ of light will pass through that object. Now that we know what alpha is, let's explain why and how we want to use it. Alpha is used to show transparency in objects through its own channel alongside the color channels (the A in RGBA).

The idea of what we want in alpha blending is to somehow combine colors based on the alpha value for a pixel. So, as we do so often in graphics, we look to our best friend linear interpolation. This is not a perfect solution, and it will require some additional things, like the painter's algorithm, to ensure that translucent objects don't occlude other objects behind them. But it does allow for a real-time, photorealistic (I think) representation of opacity in objects.

This interpolation would be used to apply $c_{fragment}$ over $c_{buffer}$, but there are many other ways to blend the alpha.
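
Written out, the usual form of that interpolation is $c_{result} = \alpha\, c_{fragment} + (1 - \alpha)\, c_{buffer}$, which is what the `gl.SRC_ALPHA` / `gl.ONE_MINUS_SRC_ALPHA` blend function used later in this write-up computes. Here is a minimal CPU-side sketch of it; `blendOver` is a made-up helper and colors are assumed to be RGB arrays in $[0,\ 1]$.

```javascript
// Hypothetical helper: blend an incoming fragment color over the color
// already in the buffer, channel by channel.
function blendOver(fragment, buffer, alpha) {
  return fragment.map((c, i) => alpha * c + (1 - alpha) * buffer[i]);
}

// Half-transparent red drawn over solid blue.
const result = blendOver([1, 0, 0], [0, 0, 1], 0.5);
```

An alpha of $1.0$ returns the fragment color unchanged and an alpha of $0.0$ leaves the buffer untouched.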

Creating the Scene

As in our previous scenes, we start out with a canvas filled with that nice blue-ish color and seemingly nothing in the scene. Well, there's really a quad in there meant to be the ground, but we just can't see it because our camera is sitting at the origin and the quad isn't in our viewing volume. To remedy this, we need to send the proper view matrix to the vertex shader. If you remember back a few weeks to Weeks 7 & 8: The Rasterization Pipeline, we learned that the view matrix is really the inverse of the camera's world matrix. So within the camera object let's define a function to get that matrix for us.

    this.getViewMatrix = function() {
        return this.cameraWorldMatrix.clone().inverse();
    }

There it is!

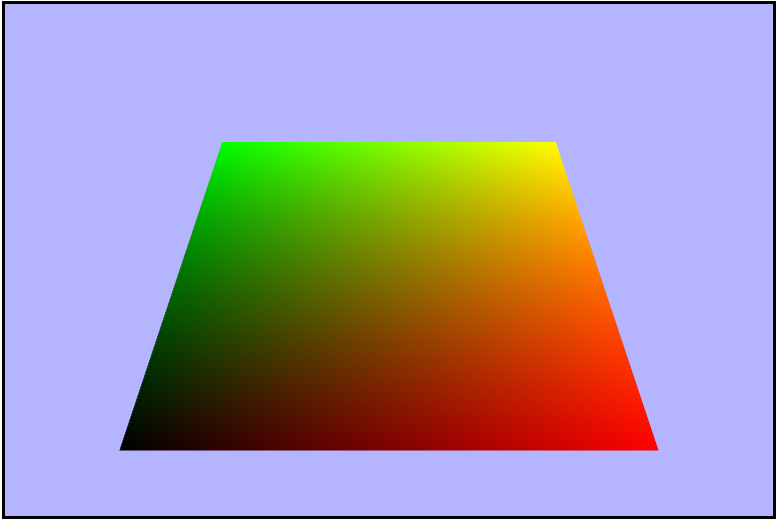

Coloring with $uv$ Coordinates

Right now the ground is completely black, so let's add some color to it. We'll start by using the $uv$ coordinates as the Red and Green values for each fragment. To do this, we need to do a couple of things in our shaders. First, in the vertex shader, we need to receive the texture coordinates from the gl context as an attribute (vertex shader only), and then we need to send them to the fragment shader using a varying, since they are interpolated for each fragment. The last important piece to mention is that the texture coordinates are represented as a vec2 because there are two values associated with each one. GLSL also has no $uv$ accessors on a vec2, so we'll have to use $x$ and $y$ for $u$ and $v$ respectively.

    attribute vec2 aTexcoords;
    ...
    varying vec2 vTexcoords;

    void main(void) {
        vTexcoords = aTexcoords;
        ...
    }

Then within the fragment shader we can use the coordinates to set the color of each pixel.

    ...
    varying vec2 vTexcoords;

    void main(void) {
        gl_FragColor = vec4(vTexcoords.x, vTexcoords.y, 0.0, uAlpha);
    }

Red/Green Gradient

Adding the Texture to the Quad

Now that we are properly sending the $uv$ coordinates to the shaders, it's time to tackle the beast that is applying/sampling a texture on the quad. There's a whole lot going on here, but a quick summary would be: create a texture in the gl context, load the image, bind the texture, upload the image data, set the wrap modes, set the filtering modes, and point the shader's sampler at it. Wow, let's slow this down a bit.

Just as with most buffered data in WebGL, we need to allocate some space in VRAM to hold our data. Then we need to create a new Image object to hold the image data in RAM for us. As with most things in JavaScript, image loading is event driven, so we need to set up a listener to tell us when the image is ready. Once we have our image, we can bind the texture and link our color data to the buffer in VRAM. We also need to flip the $y$-axis of the image, because the browser hands the image over with its origin in the top left corner, but WebGL texture coordinates put the origin in the bottom left corner.

Now it's time to set the wrap modes. Note that WebGL uses $st$ coordinates instead of $uv$ (just semantics, but important to pay attention to). When I started off I used the clamp-to-edge mode, which didn't affect anything until I started animating the texture, which we'll talk about later. Once we have told gl how to wrap, we can tell it how to handle magnification and minification. Let's start off by using the nearest filtering mode, and in just a minute we'll see why that wasn't the right choice in this situation. Lastly, we tell the shader's sampler uniform which texture unit to read from, so the shader knows about our texture.

    if (rawImage) {
        // 1. create the texture, allocating space in VRAM
        this.texture = gl.createTexture();

        // JS image object
        const image = new Image();

        // We don't know when our image will be ready, so let's only continue when it is
        image.onload = () => {
            // 2. bind the texture
            this.gl.bindTexture(gl.TEXTURE_2D, this.texture);

            // load the image into the texture
            this.gl.texImage2D(gl.TEXTURE_2D, // texture type
                0,                // level of detail (mipmap number)
                gl.RGBA,          // internal format - color components of the texture
                gl.RGBA,          // format - must be the same as internal format
                gl.UNSIGNED_BYTE, // type of data (8 bits/channel for RGBA)
                image);           // pixel source data

            // needed for the way browsers load images:
            // flips the y-axis so the image's top-left origin matches WebGL's bottom-left origin
            // (this only affects uploads made after it; see "The Great Flip Up" at the end)
            this.gl.pixelStorei(gl.UNPACK_FLIP_Y_WEBGL, true);

            // texParameteri sends a texture parameter with an integer value to the gl context
            // 3. set wrap modes (for s and t) for the texture
            this.gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_S, gl.CLAMP_TO_EDGE);
            this.gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_T, gl.CLAMP_TO_EDGE);

            // 4. set filtering modes (magnification and minification)
            this.gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MAG_FILTER, gl.NEAREST);
            this.gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.NEAREST);

            // 5. tell the shader's sampler uniform which texture unit to read
            // the 2nd arg is the texture unit index (0 targets TEXTURE0)
            this.gl.uniform1i(textureShaderProgram.uniforms.textureUniform, 0);

            // We're done for now, unbind
            this.gl.bindTexture(gl.TEXTURE_2D, null);
        };

        // set the image source data to be the raw data passed in
        image.src = rawImage.src;
    }

Then when we are rendering the quad, we will have to activate and re-bind the texture.

    if (this.texture) {
        this.gl.activeTexture(gl.TEXTURE0);
        this.gl.bindTexture(gl.TEXTURE_2D, this.texture);
    }
    ...
    // draw the quad
    ...
    // unbind it since we are done for right now
    gl.bindTexture(gl.TEXTURE_2D, null);

Looks like some lines are missing..

🤔

Earlier I mentioned that the mag/minification filtering mode of nearest was not the right choice for this project, and those pictures above show why. With nearest, each pixel takes the color of just one texel, so some of the thin grid lines were skipped entirely in the final screen render. Let's go ahead and fix that by using the linear filter instead.

    this.gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MAG_FILTER, gl.LINEAR);
    this.gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.LINEAR);

Phew, they're back!

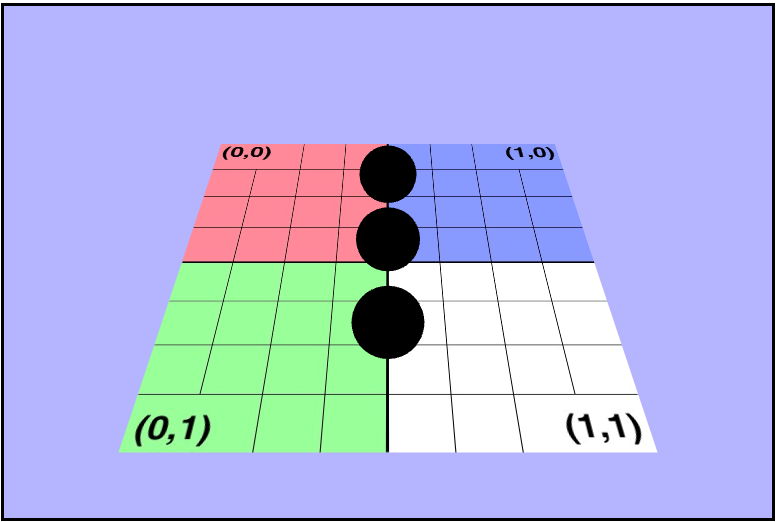

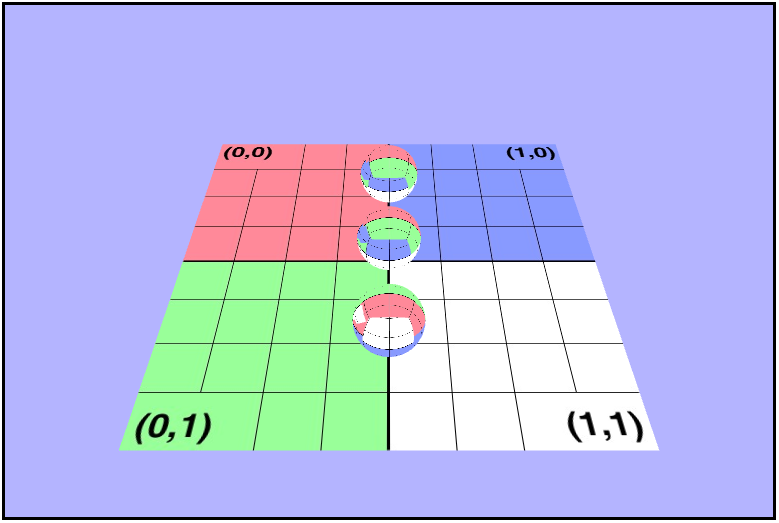

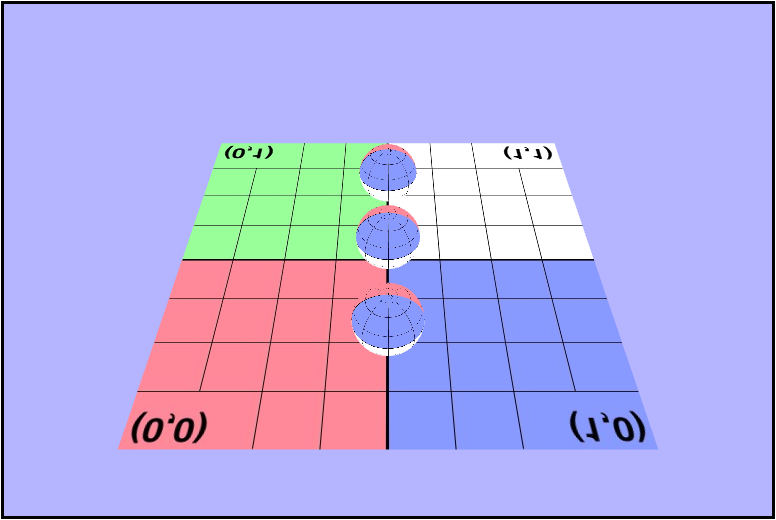

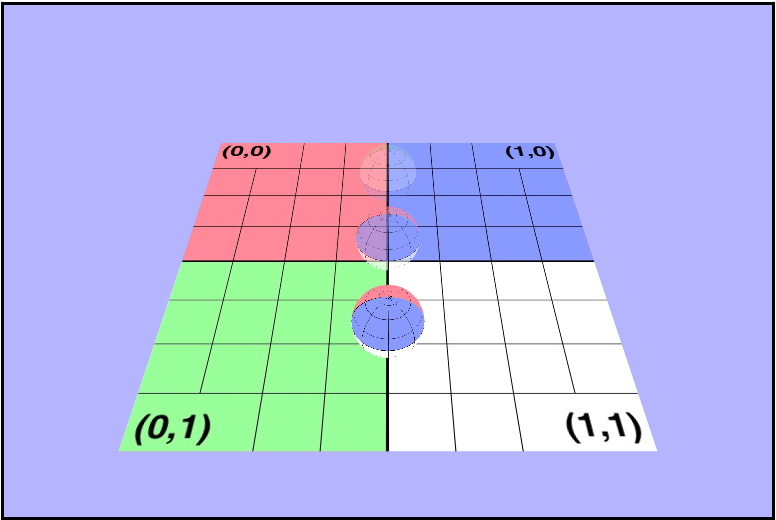

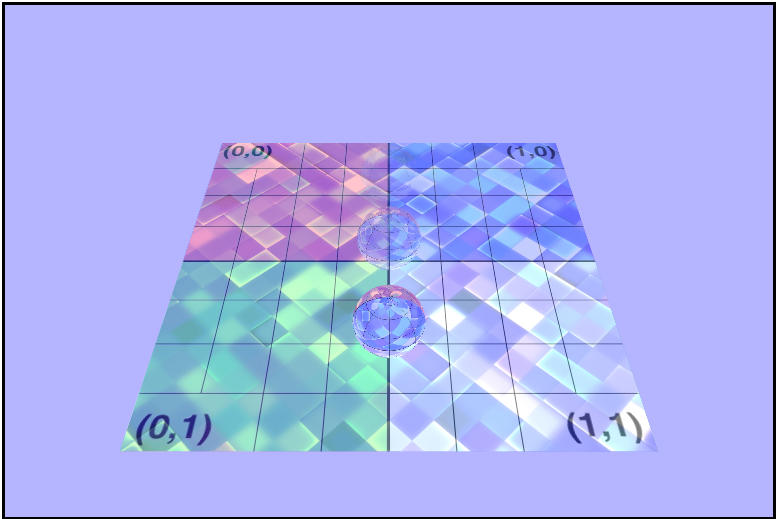

Adding Some Spheres

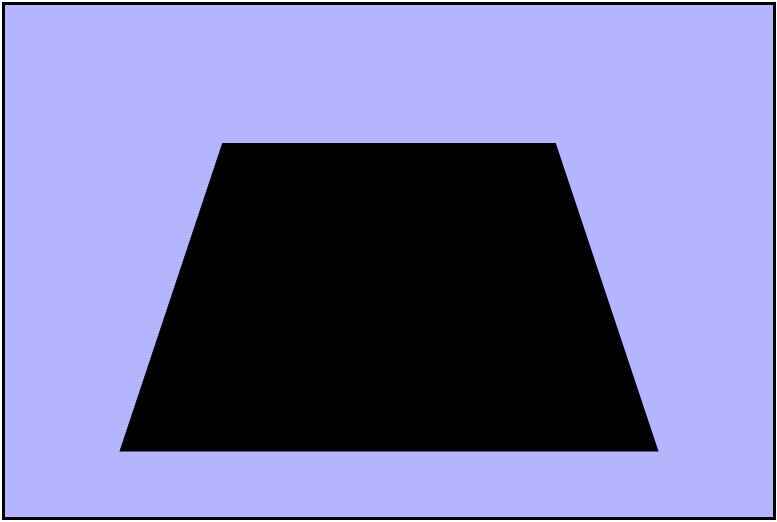

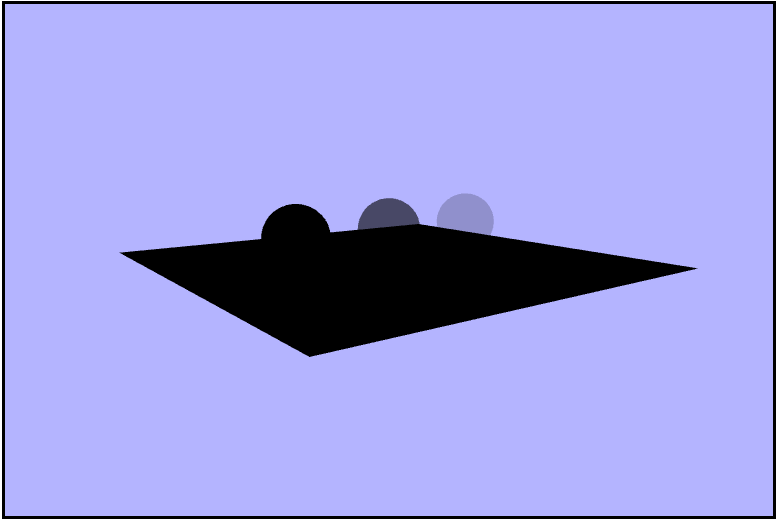

Now it's time to see how we can wrap textures around more complex objects, so let's start by adding some spheres to our scene. The geometry was set up for us, so let's just go ahead and render them.

    for (var i = 0; i < sphereGeometryList.length; ++i) {
        sphereGeometryList[i].render(camera, projectionMatrix, textureShaderProgram);
    }

As we can see, they are completely black right now, and that's because we aren't sending the texture data to them. Let's go ahead and do that now, in an identical way to how we did it earlier with the quad. We can just copy the code over to the create() function of the WebGLGeometryJSON. Once we have set up the texture in the JS code, our fragment shader should go ahead and display the texture on the spheres.

There's something (really 2 things) not right here..

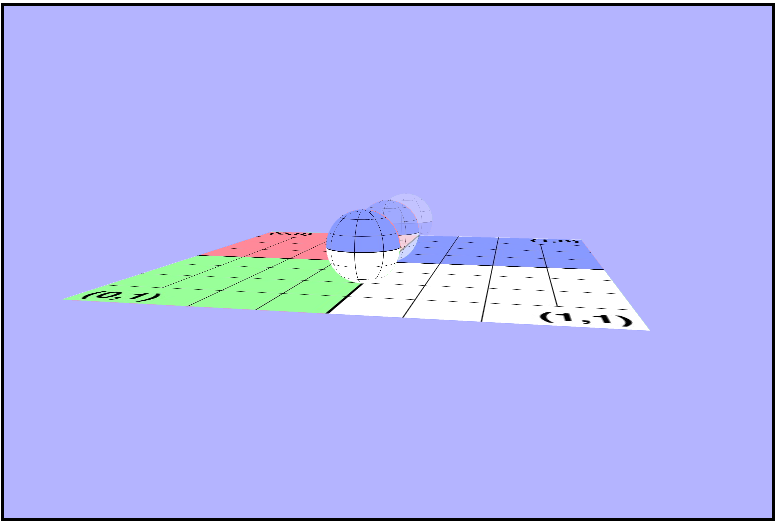

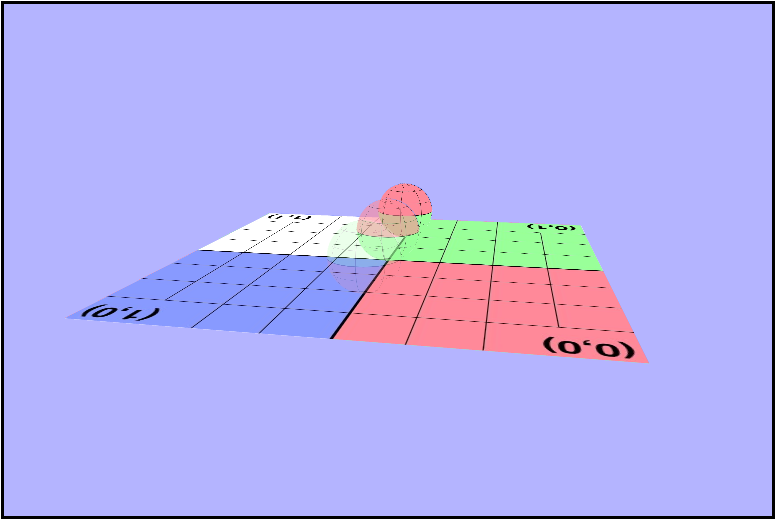

Maybe if we rotate it, we can see what's wrong.

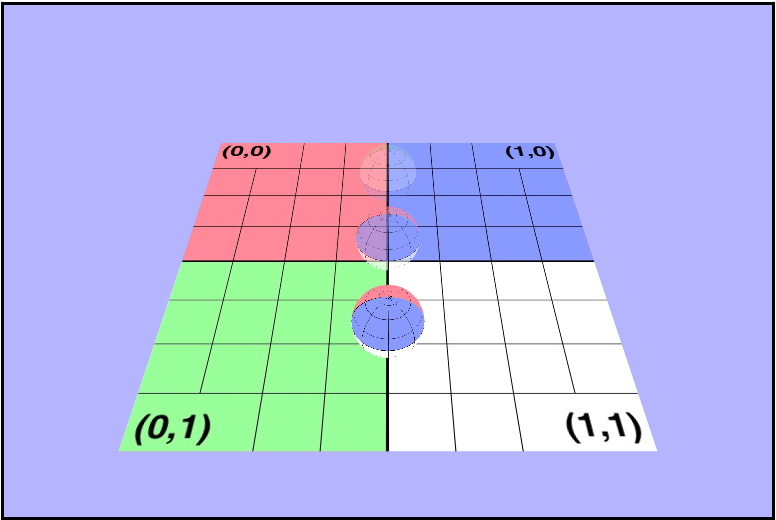

As you may have noticed, there are a couple of things wrong with both of those pictures. The first is that the texture isn't being rendered in the same direction for all of the objects. In the first picture one sphere is inverted, and in the second the ground is. I'll talk about this one in a bit because it took a while for me (and the wonderful TA Danny) to figure it out. The other is that we don't seem to be seeing the outside of the spheres, or at least not when they're facing the camera directly; we can see the inside of the sphere instead. Why are they rendering like this? Well, in order to see the right thing we need to determine what we should be seeing. In other words, we need to know which part of each object we want to be visible to us and which object is closest to the camera. We can tell the gl context to render based on distance by enabling the depth test (through the z-buffer), but that's not all we need. We are also going to have to enable some form of culling. Culling removes pieces of objects that we don't want to see, so let's take a minute and think about which faces of the sphere we want rendered on the screen. It makes sense that we'll want to see the front, right? So we'll have to enable back-face culling to remove those unwanted faces.

    gl.enable(gl.CULL_FACE);
    gl.cullFace(gl.BACK);

Now they look more like spheres!

But the ground's texture is still flipped..
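
The culling calls are shown above, but the depth test mentioned in the text is not, so here is a minimal sketch of both pieces together. `configureVisibility` and `clearFrame` are made-up function names; the `gl` calls themselves are standard WebGL. Whether the course's starter code already does some of this is something I'm assuming it might, so treat this as a sketch rather than required setup.

```javascript
// Hypothetical one-time setup: depth test plus back-face culling.
function configureVisibility(gl) {
  // Keep only the fragment closest to the camera for each pixel.
  gl.enable(gl.DEPTH_TEST);
  // Throw away triangles that face away from the camera.
  gl.enable(gl.CULL_FACE);
  gl.cullFace(gl.BACK);
}

// Hypothetical per-frame step: the depth buffer has to be cleared along
// with the color buffer, or last frame's depths would reject new fragments.
function clearFrame(gl) {
  gl.clear(gl.COLOR_BUFFER_BIT | gl.DEPTH_BUFFER_BIT);
}
```

The depth test answers "which object is closest?" and culling answers "which side of it should we see?", so the two work as a pair.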

Working with Alpha

Now that we have our spheres and our ground rendering properly, we're going to go ahead and really make some waves by adding some levels of transparency. In order to do this, we need to enable alpha blending in the gl context, set the blending mode, use the uniform alpha value (instead of the texture's value) in the fragment shader, and disable blending afterwards (so the plane doesn't get improperly rendered). One thing that I feel is important to mention here is that when we originally get the WebGL context, we are sending a WebGL context attribute called alpha. Alpha is a boolean that indicates whether or not the canvas contains an alpha channel. We want to set this to false because it tells the context not to store any alpha values in the back buffer, only RGB, making it more efficient for rendering our transparent spheres.

    gl.enable(gl.BLEND);
    // defines how we want to blend the α
    gl.blendFunc(gl.SRC_ALPHA, gl.ONE_MINUS_SRC_ALPHA);
    ...
    // render the spheres
    ...
    gl.disable(gl.BLEND);

And then in the fragment shader we want to use our uniform alpha value. Reminder that uniforms are shader variables that remain constant for the entire draw call, so each object will have a constant α. It has also come to my attention after I did this that in GLSL you can swizzle with $xyz$, $rgb$, and $stp$!

    void main(void) {
        // texture2D returns a vec4 which includes alpha, which we don't want,
        // so we can swizzle (great term) to get the rgb (xyz) values and
        // use the alpha we really want
        gl_FragColor = vec4(texture2D(uTexture, vTexcoords).xyz, uAlpha);
    }

Woah! That's translucence alright!

Wait just a second. Why can't I see through the clear ones???

As you can see, while what we did above did seem to make the spheres less opaque, we can't really see through them. Why? Side note, but "Why?" is the single most important question I have asked during this course because it often leads to the "What?" and "How?", and it makes doing these assignments way more fun! Why can't we see through them if they look translucent? Well, WebGL renders the spheres in the same order every time, and the clear ones are being rendered first. So what? Remember how we enabled the depth test earlier to render the spheres? Well, it turns out that the depth test is actually messing us up here, because the clear one gets rendered as closest and all the ones behind it fail the depth test and are discarded. The z-buffer is independent of draw order, but the alpha blending is not. We want to blend the alpha back to front by rendering the objects in that order. How can we work around this? It just so happens that the Painter's Algorithm is exactly what we need here. The painter's algorithm works by drawing objects from furthest to closest. Basically, what we need to do here is sort the spheres based on their distance from the camera on every draw call and render them from furthest to closest. Let's start by figuring out how to get the position of all of our objects, including the camera. Each object has a world matrix, and somewhere within that matrix is stored the position.

Looking at the world matrix, we see that the rightmost column contains the translations on each axis, or in other words it contains the displacement from the origin. So to get the position we can return a vector4 of that column, with a $0$ as the $w$ (last) coordinate. This is because we want to interpret the vector4 as a vector and not as a point. Then once we have a way to get the position of each object, we want to sort based on their distance from the camera. Note we are only sorting the spheres; since the ground is fully opaque it will always be rendered first. I chose to try out the wonders of JS and use the array.sort() function to do this, and we can sort on length squared because we don't care what the length actually is, just how it compares to the others'. So in a small optimization attempt let's avoid using the Math.sqrt() function.
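
As a rough sketch of those two pieces, here is one way to pull the position out of a world matrix and compare squared distances. It assumes the matrix is a flat 16-element array in row-major order (so the rightmost column lives at indices 3, 7, and 11); the course's matrix class may store things differently, and `positionFromWorldMatrix` and `distanceSquared` are made-up names.

```javascript
// Assumption: row-major 4x4 stored flat, translation in the last column.
function positionFromWorldMatrix(m) {
  // w = 0 so the result is treated as a vector rather than a point.
  return { x: m[3], y: m[7], z: m[11], w: 0 };
}

// Squared distance is enough for comparing, so no Math.sqrt needed.
function distanceSquared(a, b) {
  const dx = a.x - b.x;
  const dy = a.y - b.y;
  const dz = a.z - b.z;
  return dx * dx + dy * dy + dz * dz;
}

// A sphere translated to (2, 0, -5) and a camera at (0, 1, 3).
const sphereWorld = [1, 0, 0, 2,  0, 1, 0, 0,  0, 0, 1, -5,  0, 0, 0, 1];
const cameraWorld = [1, 0, 0, 0,  0, 1, 0, 1,  0, 0, 1, 3,   0, 0, 0, 1];

const d2 = distanceSquared(
  positionFromWorldMatrix(sphereWorld),
  positionFromWorldMatrix(cameraWorld)
);
```

Since squaring preserves ordering for non-negative lengths, sorting by `d2` gives the same order as sorting by the true distance.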

    /* Apply the painter's algorithm to the spheres in the scene and
     * sort them by distance (squared) from the camera in descending
     * order. (i.e. furthest first)
     */
    let paintersSort = () => {
        let cameraPos = camera.getPosition();

        sphereGeometryList.sort((a, b) => {
            // This comparison function returns negative (a stays first) if a
            // is further away than b and positive (b goes first) if a is
            // closer. Basically, we are sorting backwards.
            // My first version returned ~(aLen - bLen), but ~ is the bitwise
            // NOT operator (it truncates to an integer and is off by one),
            // so bLen - aLen is both clearer and correct.
            let aLen = a.getPosition().subtract(cameraPos).lengthSqr();
            let bLen = b.getPosition().subtract(cameraPos).lengthSqr();
            return bLen - aLen;
        });
    }

Now they really are translucent!

Animating the Texture

Now it's time for our $3^{rd}$ installment of Adventures in Fragment Shading 🎉. This one is different from our previous adventures in that we want to animate the texture's position, not the color. Instead of changing the color values received from the texture, we want to change the $uv$ coordinates we use to sample the texture (based on the seconds elapsed) to give the appearance of scrolling. Below are all of the changes, made exclusively to the fragment shader. For the first few I was trying to get my bearings, and I was using the wrong approach and changing the color values.

Flashing

    vec4 tex = texture2D(uTexture + sin(uTime), vTexcoords);

Still flashing, but now it's blue!

    vec4 tex = texture2D(uTexture, vTexcoords);
    tex = vec4(tex.xy, tex.z + sin(uTime), uAlpha);

Closer, but shouldn't be using a trig function

    vec4 tex = texture2D(uTexture, vTexcoords + sin(uTime));

Nice and animated, but a bit fast

    vec4 tex = texture2D(uTexture, vTexcoords + uTime);

Infinite Gridding

    vec4 tex = texture2D(uTexture, vTexcoords * uTime);

Horizontal

    vec4 tex = texture2D(uTexture, vec2(vTexcoords.x + uTime / 4.0, vTexcoords.y));

Vertical

    vec4 tex = texture2D(uTexture, vec2(vTexcoords.x, vTexcoords.y + uTime / 4.0));
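
Scrolling pushes the coordinates outside of $[0,\ 1]$, which is where the wrap mode finally starts to matter. Here is a small CPU-side sketch of what the two modes do to a scrolled coordinate. The function names are made up, and the clamp is simplified to $[0,\ 1]$ (the real thing clamps to the centre of the edge texel); I'm assuming the scrolling version of the scene would use `gl.REPEAT` for the wrap modes.

```javascript
// GLSL-style fract: keep only the fractional part.
function fract(x) {
  return x - Math.floor(x);
}

// gl.REPEAT: the texture tiles forever.
function wrapRepeat(coord) {
  return fract(coord);
}

// gl.CLAMP_TO_EDGE (simplified): everything past the edge sticks to it.
function wrapClampToEdge(coord) {
  return Math.min(1, Math.max(0, coord));
}

// Same offset as the shader above: uTime / 4.0.
function scrolledCoord(u, timeSeconds, wrap) {
  return wrap(u + timeSeconds / 4.0);
}

const repeated = scrolledCoord(0.25, 5, wrapRepeat);     // keeps cycling
const clamped = scrolledCoord(0.25, 5, wrapClampToEdge); // stuck at the edge
```

With clamping, after a few seconds every fragment samples the same edge texel, so the pattern smears out instead of scrolling.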

Adding a Second Texture

Our scene right now is awesome, so let's keep going and make it awesome++! Let's add a second texture on top of the $uv$ grid texture!

Let's choose this texture

We can add this texture in the same way we did the first one, with a few minor changes. First we'll need a second member variable in our object to hold the $2^{nd}$ texture, then a new uniform shader variable to hold the new texture, and finally a $2^{nd}$ texture buffer location in the gl context. After we get both textures loaded, we need to average the color values in the fragment shader and we should be all set. Let's take a look...
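
Those changes can be sketched roughly like this. The names `uTexture2`, `fragmentSource`, and `bindTwoTextures` are made up for illustration (only `uTexture`, `uAlpha`, and `vTexcoords` appear in the shaders above), and the sketch assumes the shader program is already in use when the uniforms are set.

```javascript
// GLSL ES 1.00 fragment shader with a second sampler (uTexture2 is a
// hypothetical name). mix(a, b, 0.5) is the average of the two colors.
const fragmentSource = `
precision mediump float;
uniform sampler2D uTexture;
uniform sampler2D uTexture2;
uniform float uAlpha;
varying vec2 vTexcoords;

void main(void) {
  vec4 first = texture2D(uTexture, vTexcoords);
  vec4 second = texture2D(uTexture2, vTexcoords);
  gl_FragColor = vec4(mix(first.rgb, second.rgb, 0.5), uAlpha);
}`;

// Hypothetical helper: put each texture on its own texture unit and tell
// each sampler uniform which unit to read from.
function bindTwoTextures(gl, textures, uniformLocations) {
  gl.activeTexture(gl.TEXTURE0);
  gl.bindTexture(gl.TEXTURE_2D, textures[0]);
  gl.uniform1i(uniformLocations[0], 0); // first sampler reads unit 0

  gl.activeTexture(gl.TEXTURE1);
  gl.bindTexture(gl.TEXTURE_2D, textures[1]);
  gl.uniform1i(uniformLocations[1], 1); // second sampler reads unit 1
}
```

The key detail is that the value passed to `gl.uniform1i` is the texture unit index, not the texture object itself.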

Is..that..right?

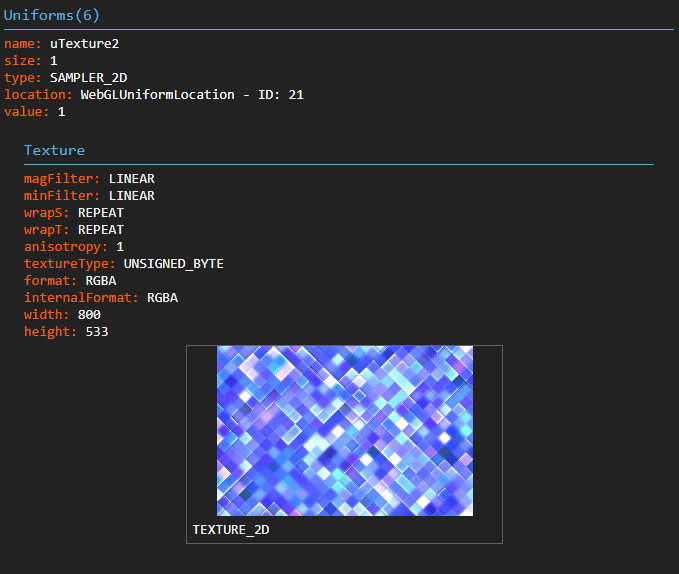

The colors have changed, but it still doesn't look like the textures were layered on top of each other. Let's isolate the new texture and try rendering just that one.

🤨 *shakes fist in angered confusion*

Hold on. Why? There's that question again, and what an interesting one it was. Let's take a look at the canvas' draw frames one at a time using the Spector.js Chrome extension. In the frame where the texture is first drawn on the quad, we can take a look at the shader state and see that our texture is in fact loaded fine.

😕 🤯

*jaw drops*

Cut to 30 minutes later and I finally look at the dimensions of the new texture: $800\times 533$... WebGL is apparently bamboozled by these non-power-of-two dimensions, and thus just seemingly gives up. (WebGL 1 only supports non-power-of-two textures with clamp-to-edge wrapping and no mipmaps; otherwise the texture samples as black.) So, one more try: let's crop the image to nice power-of-two dimensions, $512\times 512$, and we can now hold up our hands in magnificent glory as the textures are nicely layered on top of each other!!!
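
A quick check like the one below would have saved me those 30 minutes. `isPowerOfTwo` and `webgl1TextureRules` are made-up helpers that just encode the WebGL 1 restriction: repeat wrapping and mipmaps are only available when both dimensions are powers of two.

```javascript
// A positive integer is a power of two when it has exactly one bit set.
function isPowerOfTwo(n) {
  return Number.isInteger(n) && n > 0 && (n & (n - 1)) === 0;
}

// Hypothetical helper: what a WebGL 1 texture of this size is allowed to do.
function webgl1TextureRules(width, height) {
  const pot = isPowerOfTwo(width) && isPowerOfTwo(height);
  return { canRepeat: pot, canMipmap: pot };
}

const original = webgl1TextureRules(800, 533); // the image as downloaded
const cropped = webgl1TextureRules(512, 512);  // after cropping
```

Running a check like this inside the image's onload handler and logging a warning is one cheap way to catch the problem early.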

WOOOOO!!!

Animated

Animating the colors as well


The Great Flip Up


Earlier I mentioned that the textures were being rendered inconsistently and were only sometimes in the right direction. I didn't figure this problem out until after all of the other code was done, so I figured I'd just explain it at the end. The problem goes back to how I loaded the textures. I was sending the texture data to the newly bound buffer in VRAM immediately after binding it, and only flipping the $y$-axis afterwards. That apparently sometimes works, but it is wrong. By setting the flip first and then sending the data over to VRAM through the gl.texImage2D() function, the texture will always render the right way.
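
Here is a minimal sketch of the corrected order. `uploadImageToTexture` is a made-up wrapper name; the `gl` calls and arguments are the same ones used in the loading code earlier. My best guess at why the old order "sometimes" worked is that the flip setting is state on the gl context rather than on a single texture, so it stays on and affects whichever image happens to be uploaded next.

```javascript
// Hypothetical wrapper: set the flip BEFORE uploading the pixels.
function uploadImageToTexture(gl, texture, image) {
  gl.bindTexture(gl.TEXTURE_2D, texture);

  // 1. Tell WebGL to flip rows for any upload that follows.
  gl.pixelStorei(gl.UNPACK_FLIP_Y_WEBGL, true);

  // 2. Now upload; this call is the one that applies the flip.
  gl.texImage2D(gl.TEXTURE_2D, 0, gl.RGBA, gl.RGBA, gl.UNSIGNED_BYTE, image);

  gl.bindTexture(gl.TEXTURE_2D, null);
}
```

With this order every texture is flipped the same way, no matter which image finishes loading first.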